In my last blog post I ranted about two-phase commit (2PC) and MSDTC. This is going to be a quick post about a few techniques that I use. The basic premise is that I break a two-phase commit apart into multiple transactions such that each is handled independently but with resiliency against failure scenarios.

There are a number of different methods and patterns that can be used to avoid 2PC, and the right one depends upon the application-level requirements. The easiest one to reason about is the case of de-queuing a message, processing it, and then saving the result to durable storage. With MSDTC, all of that is performed as a single transaction. There are a number of issues with this approach, as outlined in my previous post.

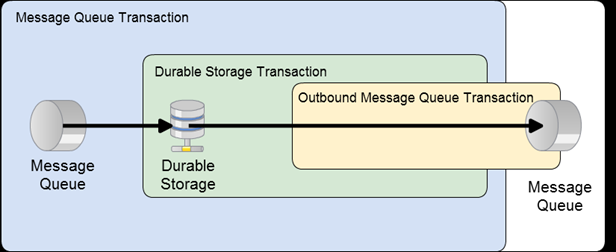

To avoid 2PC we must split the transaction apart and have one for the act of de-queuing the message and one for persisting to durable storage such as a relational database or even a NoSQL solution. The argument that is typically raised with this approach is that there is a possibility that the message dequeue may occur more than once thus raising the possibility of duplicate messages being processed and the results stored.

I have written extensively on this blog about message idempotency and the at-least-once delivery guarantee offered by message queues. To sum up those previous posts: message queues deliver a message at least once, so a consumer has to expect that the same message may show up again. Cloud-based queues are even more fun because they have no concept of traditional, fully consistent transactions. One of the easiest ways to handle idempotency is to keep track of the message identifiers for previously processed messages and then to drop messages that have already been handled.
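The identifier-tracking approach can be sketched roughly as follows. This is only an illustration, assuming SQLite as the durable store; the table names and the `open_store` and `handle_once` helpers are hypothetical and not taken from any particular library. The key point is that the message identifier and the result of the work are written in the same local transaction, so a redelivered message fails the primary-key check and is dropped.

```python
import sqlite3

def open_store(path=":memory:"):
    """Open the (hypothetical) durable store and create its tables."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS processed (message_id TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE IF NOT EXISTS results (message_id TEXT, body TEXT)")
    conn.commit()
    return conn

def handle_once(conn, message_id, body):
    """Process a message unless its identifier has been seen before.

    Returns True if the message was processed, False if it was a duplicate.
    """
    try:
        # One local transaction: commits on success, rolls back on error.
        with conn:
            # Fails with IntegrityError if this identifier was already recorded.
            conn.execute("INSERT INTO processed (message_id) VALUES (?)", (message_id,))
            # Stand-in for the real work: store a transformed copy of the body.
            conn.execute(
                "INSERT INTO results (message_id, body) VALUES (?, ?)",
                (message_id, body.upper()),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # duplicate delivery: drop it
```

Because the duplicate check and the write share a single transaction against a single resource, no distributed transaction is needed to keep them consistent.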

As an interesting aside, sagas are naturally idempotent because they can be implemented as a state machine. Let's imagine that we were modeling a message-controlled MP3 player. The user could dispatch a "PushPlay" command 100 times and we could receive the message 1000 times (due to failure scenarios like network failures, etc.), but we would only transition to the "Playing" state once. Even though "PushPlay" isn't necessarily idempotent, the saga makes it so.
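A minimal sketch of the MP3 player as a state machine might look like this. The `PlayerSaga` class, the "Stopped" state, and the "PushStop" command are assumptions added for illustration; only "PushPlay" and "Playing" come from the example above.

```python
class PlayerSaga:
    """Toy saga for a message-controlled MP3 player (hypothetical names)."""

    def __init__(self):
        self.state = "Stopped"
        self.transitions = 0  # counts real state changes, for demonstration

    def handle(self, command):
        """Apply a command; return True only if the state actually changed."""
        if command == "PushPlay" and self.state != "Playing":
            self.state = "Playing"
            self.transitions += 1
            return True
        if command == "PushStop" and self.state != "Stopped":
            self.state = "Stopped"
            self.transitions += 1
            return True
        # Repeated or unknown commands leave the saga untouched.
        return False
```

Feeding the same "PushPlay" message to `handle` a thousand times changes the state once; every later delivery is a no-op because the saga is already in the target state.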

But what about a more complex scenario? What if we must receive a message and then publish? We can handle that by splitting out one more transaction. Okay, great, we've been able to split things apart using separate transactions, but we still have to deal with failure scenarios. For example, what happens if the message is received and written to durable storage (transactionally), but the outbound message dispatch fails? These are critical failure scenarios that must be explicitly handled.

In my applications I have things configured so that, once the application state has been written to durable storage, I then push the messages to be published onto the wire. If that fails, it's pretty simple to use a Circuit Breaker Pattern implementation to retry after a specified interval. It also really depends on the type of queue that you're delivering to. For example, a local queue has a much lower chance of failure than a remote queue because the network has been removed from the picture, at least for delivery to the local queue.
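For readers who haven't used one, a circuit breaker can be sketched in a few lines. This is a simplified illustration, not the implementation used in my applications: the `CircuitBreaker` class, its parameters, and the injectable `clock` are all assumptions made for the example.

```python
import time

class CircuitOpenError(Exception):
    """Raised when a call is refused because the circuit is open."""

class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so the breaker is testable
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; retry later")
            # Interval elapsed: fall through and allow one trial call.
        try:
            result = func(*args)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The dispatch call is wrapped as `breaker.call(send, message)`. While the circuit is open the message simply stays undispatched in durable storage, and the next attempt is made once the interval has passed.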

In any case, if the message fails to dispatch because of power failure, etc., the durable storage has a list of messages it must dispatch when the application restarts. At startup, I scan that list and dispatch the set of undispatched messages. I then mark each message as dispatched in the database.
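The store-then-dispatch flow can be sketched like this, again assuming SQLite as the durable store. The `state` and `outbox` tables and the `open_outbox`, `commit_work`, and `dispatch_pending` helpers are hypothetical names chosen for the example, and `send` stands in for whatever pushes a message onto the wire.

```python
import sqlite3

def open_outbox(path=":memory:"):
    """Open the (hypothetical) store holding application state and pending messages."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS state (key TEXT PRIMARY KEY, value TEXT)")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS outbox ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "body TEXT NOT NULL, "
        "dispatched INTEGER NOT NULL DEFAULT 0)"
    )
    conn.commit()
    return conn

def commit_work(conn, key, value, outgoing):
    """Save application state and the messages to publish in one local transaction."""
    with conn:
        conn.execute("INSERT OR REPLACE INTO state (key, value) VALUES (?, ?)", (key, value))
        conn.executemany("INSERT INTO outbox (body) VALUES (?)", [(b,) for b in outgoing])

def dispatch_pending(conn, send):
    """Send every undispatched message, marking each one after it is sent.

    Run after commit_work and again at application startup. If send raises,
    the row stays undispatched and is retried on the next run.
    """
    rows = conn.execute(
        "SELECT id, body FROM outbox WHERE dispatched = 0 ORDER BY id"
    ).fetchall()
    count = 0
    for row_id, body in rows:
        send(body)
        with conn:
            conn.execute("UPDATE outbox SET dispatched = 1 WHERE id = ?", (row_id,))
        count += 1
    return count
```

Note that a crash between `send` and the `UPDATE` means the message goes out again on restart. That is the same at-least-once behavior discussed earlier, which is why the receiving side needs to be idempotent.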

Interestingly enough, the above pattern is the exact one I use for my EventStore project. A message is received (be it from a queue or even an RPC call) and the resulting work is committed as a unit to some kind of persistent storage. Once successfully persisted, the EventStore hands off any resulting work in the form of messages to be dispatched to a "dispatcher". This dispatcher then pushes the messages onto the queue of your choice or can even make RPC calls on another thread. Once the message is considered dispatched, it is marked as such against the durable storage. In the event of a failure scenario the message is never marked as dispatched, and during the next application restart those messages are pushed onto the wire.

Conclusion

What's amazing to me about these patterns is how they enable choice. That's the bottom line for me. I want to choose the technologies that fit based upon the requirements of the application—not the requirements of the infrastructure. Infrastructure is there to serve the application, not the other way around. All too often we start our application design by taking different components in our infrastructure as a given, little realizing the profound effects and demands that these infrastructure components place upon our application code. This is one of the major factors behind vendor lock-in. But by switching things around, we can keep our infrastructure flexible with the premise that it exists only to serve the needs of the application. And when a more effective infrastructure component comes along, we can make the switch without significant effort.

![Nested Transactions](https://blog.jonathanoliver.com/images/Nested20Transactions_thumb5B45D_thumb.png)